Imagine a world where your applications converse seamlessly with users, translate languages on the fly, and generate creative content with ease. This is the promise of Large Language Models (LLMs) like Google Gemini, OpenAI’s ChatGPT, and Anthropic’s Claude. But integrating these powerful AI tools has its challenges: each LLM comes with its own API quirks, security protocols, and billing models, and that complexity can quickly turn your AI plans into an integration headache.

That’s where Apigee, Google Cloud’s API Management Platform, comes to the rescue. Apigee acts as a unified gateway, simplifying interactions with multiple LLMs and providing a robust set of API management features to ensure security, scalability, and efficiency.

In this two-part blog post, we’ll explore the benefits of using Apigee as an LLM gateway and delve into a practical implementation. We’ll demonstrate how Apigee can streamline your AI journey, enhance security, and unlock the full potential of LLMs.

Why Use Apigee as an LLM Gateway?

As organizations increasingly leverage AI capabilities, managing interactions with multiple LLMs can become a daunting task. Each LLM presents unique challenges:

- API Differences: Each LLM has its own API with varying structures, authentication methods, and data formats.

- Security Protocols: Different LLMs may have different security requirements, making it difficult to ensure consistent protection.

- Rate Limits and Usage Policies: LLMs often have usage limits and rate restrictions, which can be challenging to manage across multiple providers.

- Cost Management: Each LLM has its own pricing model, making it crucial to track usage and optimize costs.

- Lack of Centralized Monitoring & Analytics: Without a central platform, it’s difficult to gain a comprehensive view of LLM usage, performance, and potential issues.
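To make the first point concrete, here is a minimal Python sketch of how the same single-turn prompt has to be packaged for each provider. It only builds the URL, headers, and JSON body (nothing is sent), following the public Gemini `generateContent`, OpenAI Chat Completions, and Anthropic Messages endpoints as commonly documented. The model IDs and the `build_provider_request` helper are illustrative placeholders, so check each provider’s current API reference before relying on them.

```python
import json

# Placeholder model IDs (assumption): use whichever models your accounts can access.
DEFAULT_MODELS = {
    "gemini": "gemini-1.5-flash",
    "chatgpt": "gpt-4o-mini",
    "claude": "claude-3-5-sonnet-latest",
}


def build_provider_request(llm: str, prompt: str, api_key: str) -> tuple[str, dict, bytes]:
    """Return (url, headers, body) for a single-turn text prompt; nothing is sent."""
    model = DEFAULT_MODELS[llm]
    if llm == "gemini":
        # Gemini API: key in x-goog-api-key, prompt nested in contents/parts.
        url = f"https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"
        headers = {"x-goog-api-key": api_key}
        payload = {"contents": [{"parts": [{"text": prompt}]}]}
    elif llm == "chatgpt":
        # OpenAI Chat Completions: bearer token, chat-style messages list.
        url = "https://api.openai.com/v1/chat/completions"
        headers = {"Authorization": f"Bearer {api_key}"}
        payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    elif llm == "claude":
        # Anthropic Messages API: x-api-key plus a version header; max_tokens is required.
        url = "https://api.anthropic.com/v1/messages"
        headers = {"x-api-key": api_key, "anthropic-version": "2023-06-01"}
        payload = {
            "model": model,
            "max_tokens": 1024,
            "messages": [{"role": "user", "content": prompt}],
        }
    else:
        raise ValueError(f"unsupported LLM: {llm}")
    headers["Content-Type"] = "application/json"
    return url, headers, json.dumps(payload).encode("utf-8")
```

Three providers means three authentication schemes (`x-goog-api-key`, a bearer token, `x-api-key` plus a version header) and three body layouts: exactly the per-provider detail a gateway can hide from client applications.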

Apigee addresses these challenges by providing a centralized platform to manage, secure, and scale your LLM APIs. It acts as a single point of entry for all LLM interactions, simplifying development and reducing complexity.

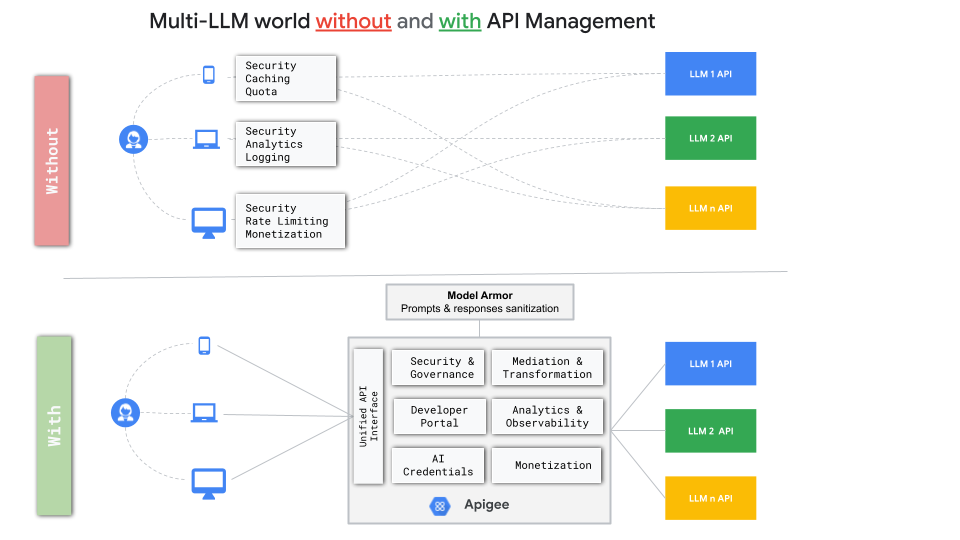

Without API management, integrating with multiple LLMs can lead to a tangled web of connections and potential redundancies. Each integration has to handle security, caching, analytics, and other essential functions on its own.

Apigee streamlines this process by:

- Providing a unified API interface: Interact with all your LLMs through a single, consistent API.

- Enhancing security: Protect your LLM APIs with authentication, authorization, and prompt sanitization using Google’s Model Armor.

- Managing API keys securely: Store LLM API keys within Apigee in an encrypted key value map (KVM).

- Implementing API management best practices: Leverage rate limiting, quotas, mediation, transformation, analytics, monetization, monitoring, and caching to optimize performance and control costs.

- Enabling comprehensive analytics and logging: Apigee provides detailed insights into API usage, performance, and errors, including token consumption, latency, and error rates for each LLM, so you can track LLM interactions, identify trends, and troubleshoot issues effectively.

- Supporting API monetization: Apigee enables you to monetize your LLM APIs by tracking usage, implementing tiered pricing plans, and integrating with billing systems. This allows you to generate revenue, control access, and ensure the long-term sustainability of your LLM-powered applications.
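The “unified API interface” point is easiest to see as a contract. The following Python sketch assumes a hypothetical request and response shape for the gateway (the field names `llm`, `prompt`, `text`, and the token counters are illustrative, not part of Apigee or any provider API): clients send the same two fields no matter which model they target, and always get the same structure back.

```python
from dataclasses import asdict, dataclass

# Hypothetical identifiers the gateway accepts for the three backends.
SUPPORTED_LLMS = ("gemini", "chatgpt", "claude")


@dataclass(frozen=True)
class GatewayRequest:
    """What every client sends to the gateway, whichever LLM it targets."""
    llm: str
    prompt: str

    def __post_init__(self) -> None:
        # Reject bad input at the edge instead of letting a provider fail later.
        if self.llm not in SUPPORTED_LLMS:
            raise ValueError(f"llm must be one of {SUPPORTED_LLMS}")
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")


@dataclass(frozen=True)
class TokenUsage:
    input_tokens: int
    output_tokens: int

    @property
    def total_tokens(self) -> int:
        return self.input_tokens + self.output_tokens


@dataclass(frozen=True)
class GatewayResponse:
    """What every client gets back, whichever LLM answered."""
    llm: str
    text: str
    usage: TokenUsage

    def to_json_dict(self) -> dict:
        # asdict() skips properties, so add the derived total explicitly.
        body = asdict(self)
        body["usage"]["total_tokens"] = self.usage.total_tokens
        return body
```

Validating the `llm` field at the gateway means an unsupported model name is rejected with one consistent error instead of surfacing as a provider-specific failure.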

Practical Implementation: A Proof of Concept

To illustrate these benefits, I’ve developed a Proof of Concept (PoC) that showcases Apigee’s capabilities as an LLM gateway. This PoC focuses on a scenario where users need to access different LLMs for various tasks, such as text summarization, question answering, and code generation.

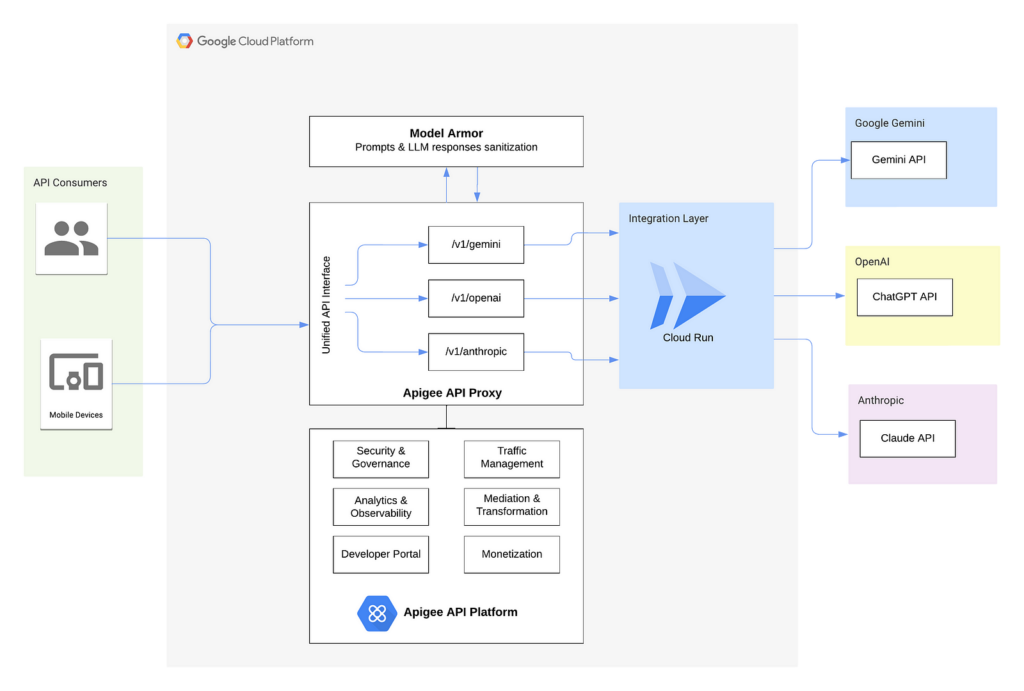

Our PoC architecture comprises:

- Streamlit Frontend: A simple user interface for interacting with the LLMs.

- Apigee API Management Platform: The secure gateway for all LLM requests and responses.

- Integration Layer on Google Cloud Run: A lightweight Python/Flask service that handles communication with the selected LLMs.

- Backend LLMs: Google Gemini, OpenAI’s ChatGPT, and Anthropic’s Claude.

Now let’s take a closer look at the main building blocks of our architecture.

User Interface

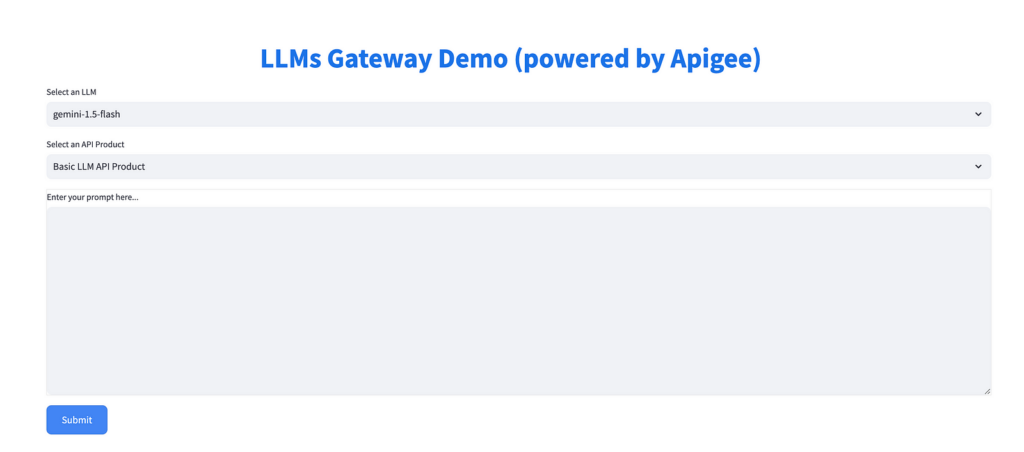

Our Streamlit frontend provides a simple and intuitive user experience:

- LLM Selection: Users can choose their preferred LLM (Gemini, ChatGPT, or Claude) from a dropdown menu.

- API Product Choice: Users can select between “Basic” and “Advanced” API products, each offering different features.

- Prompt Input: A text field allows users to enter their prompts for the LLM.

- Response Display: The LLM’s response is displayed upon submission, together with the number of tokens used.
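A frontend call through the gateway could be sketched in Python as below, using only the standard library. Everything specific here is an assumption for illustration: the proxy URL, the `x-apikey` header (the location an Apigee VerifyAPIKey policy reads the key from is configurable), the placeholder consumer keys for a “Basic” and an “Advanced” app, and the response fields `text` and `usage.total_tokens`.

```python
import json
import urllib.request

# Hypothetical values: proxy URL and one consumer key per subscribed API product.
GATEWAY_URL = "https://api.example.com/llm/v1/generate"
APP_KEYS = {"Basic": "basic-app-consumer-key", "Advanced": "advanced-app-consumer-key"}


def build_gateway_request(llm: str, product: str, prompt: str) -> urllib.request.Request:
    """Build the single request shape the frontend sends for every LLM."""
    body = json.dumps({"llm": llm, "prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        GATEWAY_URL,
        data=body,
        # The product choice only decides which app key is presented to Apigee.
        headers={"Content-Type": "application/json", "x-apikey": APP_KEYS[product]},
        method="POST",
    )


def render_result(response_body: bytes) -> str:
    """Format the gateway's (assumed) unified response, including token usage."""
    data = json.loads(response_body)
    return f"{data['text']}\n\nTokens used: {data['usage']['total_tokens']}"
```

Sending is a single `urllib.request.urlopen(req)` call; the two dropdown selections simply become the `llm` field and the choice of consumer key.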

API Products

We defined two API products in Apigee.

Basic API Product:

- Provides access to the LLMs through a secure API proxy deployed in Apigee.

- Implements rate limits to control the number of requests per time period.

- Sets quotas to limit total usage over a billing cycle.

Advanced API Product:

- Includes all features of the Basic API product.

- Adds prompt sanitization using Google’s Model Armor to filter potentially harmful or inappropriate content using a preconfigured template.
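Conceptually, the two products apply the same checks and differ only in the sanitization step. The Python sketch below models that flow with a simple fixed one-minute window for the rate limit and a running counter for the billing-cycle quota. It is not how Apigee’s SpikeArrest or Quota policies count internally, and the limits, the `ProductPolicy`/`UsageCounter`/`handle` names, and the `screen_prompt` callable (a stand-in for a Model Armor check) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductPolicy:
    """Hypothetical limits; in Apigee they would live on the API product and proxy policies."""
    name: str
    requests_per_minute: int  # rate limit: short-window burst control
    cycle_quota: int          # quota: total calls allowed per billing cycle
    sanitize_prompts: bool    # Advanced only: screen prompts before any LLM call


BASIC = ProductPolicy("Basic", requests_per_minute=10, cycle_quota=1_000, sanitize_prompts=False)
ADVANCED = ProductPolicy("Advanced", requests_per_minute=10, cycle_quota=1_000, sanitize_prompts=True)


@dataclass
class UsageCounter:
    policy: ProductPolicy
    window_start: float = float("-inf")
    window_count: int = 0
    cycle_count: int = 0  # never reset here; a real quota resets each billing interval

    def admit(self, now: float) -> tuple[bool, str]:
        # Fixed one-minute window: start a fresh count once 60 s have passed.
        if now - self.window_start >= 60:
            self.window_start, self.window_count = now, 0
        if self.window_count >= self.policy.requests_per_minute:
            return False, "rate limit exceeded"
        if self.cycle_count >= self.policy.cycle_quota:
            return False, "quota exhausted for this billing cycle"
        self.window_count += 1
        self.cycle_count += 1
        return True, "ok"


def handle(counter: UsageCounter, prompt: str, now: float, call_llm, screen_prompt) -> str:
    """Apply the product's checks in order, then forward the prompt."""
    allowed, reason = counter.admit(now)
    if not allowed:
        return reason
    if counter.policy.sanitize_prompts and not screen_prompt(prompt):
        return "prompt blocked by sanitization"
    return call_llm(prompt)
```

In the actual deployment these limits belong to the API products and their proxy policies, so moving a developer from Basic to Advanced is largely a subscription change rather than a code change.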

Integration Layer on Google Cloud Run

The integration layer, developed using Python/Flask and deployed on Google Cloud Run, plays a critical role in our architecture:

- Handles LLM Interactions: It receives requests from Apigee, extracts the necessary information (selected LLM, prompt, API key), and communicates with the chosen LLM using its specific API.

- Abstracts Backend Complexity: It hides the complexities of interacting with different LLM APIs, providing a unified interface for Apigee.

- Ensures Scalability and Maintainability: Deploying on Cloud Run allows the integration layer to scale automatically based on demand and simplifies deployment and maintenance.
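Abstracting backend complexity mostly comes down to translating each provider’s response into one shape. A minimal Python sketch of that normalization step, assuming the field layouts of the Gemini `generateContent`, OpenAI Chat Completions, and Anthropic Messages responses (trimmed to the fields used here), might look like this; the output shape and the `normalize_response` name are illustrative, not taken from the PoC’s code.

```python
def normalize_response(llm: str, raw: dict) -> dict:
    """Map a provider-specific response body onto one gateway response shape."""
    if llm == "gemini":
        # Gemini: candidates[].content.parts[].text, counts in usageMetadata.
        parts = raw["candidates"][0]["content"]["parts"]
        text = "".join(part.get("text", "") for part in parts)
        usage = raw.get("usageMetadata", {})
        inp, out = usage.get("promptTokenCount", 0), usage.get("candidatesTokenCount", 0)
    elif llm == "chatgpt":
        # OpenAI Chat Completions: choices[].message.content, counts in usage.
        text = raw["choices"][0]["message"]["content"]
        usage = raw.get("usage", {})
        inp, out = usage.get("prompt_tokens", 0), usage.get("completion_tokens", 0)
    elif llm == "claude":
        # Anthropic Messages: a list of content blocks; keep only the text blocks.
        text = "".join(b["text"] for b in raw["content"] if b.get("type") == "text")
        usage = raw.get("usage", {})
        inp, out = usage.get("input_tokens", 0), usage.get("output_tokens", 0)
    else:
        raise ValueError(f"unsupported LLM: {llm}")
    return {
        "llm": llm,
        "text": text,
        # Total is computed as input + output so all three providers agree.
        "usage": {"input_tokens": inp, "output_tokens": out, "total_tokens": inp + out},
    }
```

In the Flask service this function would run right after the provider call returns, so Apigee and the frontend only ever see `text` plus the three token counters, which is also what makes per-request token tracking straightforward.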

Secure Storage of AI Credentials

Apigee securely stores the API keys for each LLM using an encrypted key value map. This centralized approach enhances security by:

- Preventing API key exposure: Developers and end-users don’t need to handle sensitive LLM API keys directly.

- Simplifying key management: API keys can be easily rotated or revoked within Apigee.
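On the gateway side, Apigee would read the provider key from the encrypted KVM (with a KeyValueMapOperations policy) and attach it to the request it sends to Cloud Run. The Python sketch below mimics that pattern with an in-memory store so rotation and revocation are easy to see. The `CredentialStore` class, the `x-llm-api-key` header name, and the masking rule are hypothetical, and a dictionary in process memory is not encrypted storage.

```python
class CredentialStore:
    """In-memory stand-in (assumption) for the encrypted KVM: one active key per LLM."""

    def __init__(self) -> None:
        self._keys: dict[str, str] = {}

    def put(self, llm: str, key: str) -> None:
        # Rotation is an overwrite; client apps keep using their own gateway keys.
        self._keys[llm] = key

    def revoke(self, llm: str) -> None:
        self._keys.pop(llm, None)

    def get(self, llm: str) -> str:
        if llm not in self._keys:
            raise KeyError(f"no active credential for {llm}")
        return self._keys[llm]

    def __contains__(self, llm: str) -> bool:
        return llm in self._keys


def mask(secret: str) -> str:
    """Log-safe form of a secret: short values are fully hidden, long ones keep 4 chars."""
    if len(secret) <= 8:
        return "*" * len(secret)
    return "*" * (len(secret) - 4) + secret[-4:]


def inject_provider_key(store: CredentialStore, llm: str, headers: dict) -> dict:
    """Gateway side: copy the headers and add the provider key (hypothetical header name)."""
    out = dict(headers)
    out["x-llm-api-key"] = store.get(llm)
    return out
```

Because clients authenticate only with their Apigee keys, rotating a provider key is a single `put` on the gateway side; no frontend or developer app has to change.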

Benefits Highlighted by the Implementation

Implementing Apigee as an LLM gateway in this architecture turns the concepts above into a working system. This validates the approach and highlights several tangible benefits.

Simplified User Experience for End-Users

- Ease of Use: End-users interact with a straightforward interface without worrying about underlying complexities.

- No Credential Management: End-users are not burdened with handling or securing LLM API keys.

- Choice and Flexibility: End-users can select the LLM that best fits their needs through the frontend application.

Streamlined Developer Experience

- Centralized Access: Developers interact with a single, unified API gateway rather than multiple LLM APIs.

- API Key Management: Developers obtain API keys from the Apigee Developer Portal, where the API products and documentation are published.

- Simplified Integration: Abstracts the complexities of different LLMs, allowing developers to focus on building applications.

Robust Security and Compliance

- Prompt Sanitization: Protects against inappropriate content and ensures compliance.

- Secure Access: Only authorized users and developers can access the APIs.

- Secure Credential Storage: API keys are securely stored and managed within Apigee, reducing risks.

Effective API Management

- Rate Limiting and Quotas: Prevents misuse and manages resource allocation.

- Caching: Optional caching can improve performance and reduce costs.
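Apigee provides a ResponseCache policy for this. To show the idea independently of Apigee, here is a small Python TTL cache keyed on a SHA-256 hash of the selected LLM and the prompt; the `PromptCache` name, the five-minute default TTL, and the injectable clock are illustrative choices, not Apigee behavior.

```python
import hashlib
import json
import time


class PromptCache:
    """TTL cache for identical prompts sent to the same LLM (illustrative only)."""

    def __init__(self, ttl_seconds: float = 300.0, clock=time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: dict[str, tuple[float, dict]] = {}

    @staticmethod
    def key(llm: str, prompt: str) -> str:
        # JSON-encode the pair so ("a", "bc") and ("ab", "c") hash differently.
        raw = json.dumps([llm, prompt]).encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def get_or_call(self, llm: str, prompt: str, call_llm) -> tuple[dict, bool]:
        """Return (response, cache_hit); call the LLM only on a miss or expiry."""
        k = self.key(llm, prompt)
        entry = self._entries.get(k)
        if entry is not None and self.clock() < entry[0]:
            return entry[1], True
        response = call_llm(llm, prompt)
        self._entries[k] = (self.clock() + self.ttl, response)
        return response, False
```

Caching only pays off when identical prompts recur, and because LLM output is not deterministic, a hit returns the stored answer rather than a fresh one. Keying on the LLM and prompt alone also shares answers across users, so user- or app-specific context belongs in the key when that matters.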

Detailed Analytics and Monetization

- Token Usage Tracking: Monitors the number of tokens consumed per request, providing insights into usage patterns and costs, which is essential for billing and cost optimization.

- Application Insights: Identifies which applications are using the most resources.

- Billing Support: The implementation of “Basic” and “Advanced” API products showcases how Apigee enables tiered pricing strategies, allowing you to offer different levels of service and features at various price points. This demonstrates how Apigee can support your monetization goals for LLM APIs.
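Token-based billing reduces to bookkeeping on the token total each response carries. The Python sketch below aggregates tokens per app and product and prices them per 1,000 tokens; the prices, app names, and the `UsageLedger` class are hypothetical, and in Apigee the counts would typically be captured into analytics (for example with a DataCapture policy on Apigee X) rather than kept in application memory.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical prices per 1,000 tokens for each API product tier.
PRICE_PER_1K = {"Basic": Decimal("0.50"), "Advanced": Decimal("0.80")}


class UsageLedger:
    """Accumulates token usage per (app, product) pair."""

    def __init__(self) -> None:
        self.tokens = defaultdict(int)  # (app, product) -> total tokens

    def record(self, app: str, product: str, total_tokens: int) -> None:
        self.tokens[(app, product)] += total_tokens

    def top_apps(self, n: int = 3) -> list[tuple[str, int]]:
        """Apps ranked by total tokens consumed, across all products."""
        per_app = defaultdict(int)
        for (app, _product), count in self.tokens.items():
            per_app[app] += count
        return sorted(per_app.items(), key=lambda item: item[1], reverse=True)[:n]

    def invoice(self, app: str) -> Decimal:
        """Tiered charge for one app, rounded to cents (Decimal avoids float drift)."""
        total = sum(
            (Decimal(count) / 1000 * PRICE_PER_1K[product]
             for (a, product), count in self.tokens.items() if a == app),
            Decimal("0"),
        )
        return total.quantize(Decimal("0.01"))
```

For instance, an app that used 1,500 Basic tokens and 2,000 Advanced tokens would be charged 0.75 + 1.60 = 2.35 at these made-up rates.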

Scalable and Maintainable Architecture

- Integration Layer on Cloud Run: Offers scalability and easy maintenance.

- Modular Design: Separates concerns between Apigee, the integration layer, and the frontend.

Conclusion

Integrating multiple Large Language Models into applications can be challenging due to differing APIs and security protocols. By leveraging Apigee as an LLM gateway, we can simplify this process, enhance security, and provide a unified interface.

Our practical implementation demonstrates how Apigee, combined with a Streamlit frontend and an integration layer on Google Cloud Run, offers a seamless and secure way to interact with various LLMs. This approach brings significant benefits:

- Simplified User Experience for End-Users: End-users can easily select and interact with different LLMs through the frontend application without managing API keys.

- Streamlined Developer Experience: Developers obtain API keys from the Apigee Developer Portal, where the API products are published. This centralized approach simplifies credential management and provides consistent access to multiple LLMs through a single gateway.

- Enhanced Security: Centralized credential management and prompt sanitization ensure robust security and compliance for both end-users and developers.

- Effective API Management: Features like rate limiting, quotas, and analytics optimize resource usage and enable monetization strategies, allowing you to generate revenue from your LLM APIs.

- Scalable Architecture: The modular design allows for easy scaling and maintenance, accommodating growth and evolving requirements.

In Part 2, we’ll dive into the technical details of implementing this proof of concept. We’ll cover setting up the Apigee environment, creating the unified API proxy, developing the integration layer on Cloud Run, and best practices for security and scalability. This comprehensive guide will equip you with the knowledge to build a powerful LLM gateway for your applications using Apigee.

Stay tuned for Part 2.

Note: This blog post was originally published on my Medium.com blog.
